Metacognition has been an area of professional interest since a morning conversation with Professor Daniel Muijs at Embley, Hampshire, in 2018. It was about the time the EEF released the Metacognition and Self-Regulated Learning Guidance Report. More recently, the EEF published Metacognition and Self-Regulation: Evidence Review (May 2020). I remain interested in this topic as it relates to Successive Relearning and RememberMore.

Why am I interested in Metacognition?

Metacognition is learning. It is a central tenet or ingredient of building student agency. A tool for leveraging greater investment in, or commitment to, learning by the learner. Anyone who has skin in the game has a deeper, vested interest in the outcome, right? At least that is my perspective.

There is extensive evidence that metacognition is related to attainment; however, that is not the same thing as stating that improving metacognition will lead to improved attainment. That said, is there a more important ability than to know what you know and don’t know? If learners cannot differentiate accurately between what they know and do not know, they can hardly be expected to engage in advanced metacognitive activities such as evaluating their learning, or making plans for effective control of that learning. At its most reductive, it is a question of resource allocation. It is why knowledge monitoring ability (one half of the metacognition pie) and academic attainment are moderately correlated. (The other half of the metacognition pie is knowledge control, the regulation of behaviours as a consequence of monitoring.)

Hence, I am often “thinking about thinking” – “thinTHINKINGking.” About my own learning. How I design learning to include metacognition (my teaching practice). What are learners thinking, and how do I know? How do I make that thinking visible?

As a teacher, I am thinking about my own learning. How I role model my own learning. How I role model my own reflective practice and metacognition with my classes.

After defining the declarative content (and discarding some content too) I think at length about the thinking I want to elicit. “Learning is the residue of thought.” Only then do I embark on designing and sequencing the learning activities. There is also a thought to efficiency, as sometimes there is value in pre-teaching content (e.g. vocabulary) or investing in a routine, to expedite later learning. I am not that concerned with the activity itself, or the strategy, or technique, but rather the thinking it is designed to elicit, and how we will capture and harness that thinking. Much of this approach stems from Ron Ritchhart’s work on Cultures of Thinking. Do connect with Simon Brooks @sbrooks_simon if this resonates.

I think about how I encourage / direct learners to think about their thinking, and how to think about their learning. The importance of metacognitive practice (knowledge / skills) is high on my pedagogical agenda and is elevated when connecting with planning: defining and designing meso cycles (cycles within a scheme of learning, 8-12 lessons in English), assessment and feedback.

In this post, we are looking more closely at regulation (knowledge skills), and how learners use these insights (resource allocation), than at metacognitive knowledge (what a learner knows about the way they learn or how they learn), as in this scenario the learning is prescribed by the English curriculum and text. Here the regulation is woven into the assessment cycle.

Shortly after the start of the term (book setup, introductions, pre-quiz), I share with students the broad macro learning aims of the year and unit (annual exam, termly assessment), then the immediate learning aims of the meso cycle, before building the micro cycles (macro, meso and micro are terms borrowed from my Sports Scientist training). This is aligned with the “five key strategies” of formative assessment set out by Leahy et al. (2005).

2020 Macro, meso and micro

Macro – annual exams, three termly controlled assessments (adjusted pending the curriculum allocation). Marked and moderated. (Termly BIG Quiz – 100 declarative knowledge questions.)

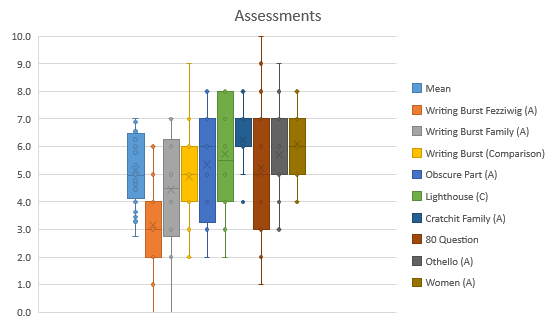

Mesocycle – designed to accomplish a particular goal. Every ten lessons, a “Responsive Teacher Task” or extended assessment piece, supported (success criteria, rubric, exemplar) and delivered under semi-controlled conditions. Marked with feed forward. Below: six A Christmas Carol RRTs (plus two Othello). (A)nalysis or (C)omprehension. A declarative knowledge quiz – which was only 80 questions.

Micro – a lesson or short sequence of lessons (1-4). Teach-Adapt-Assess. I assess every lesson (except Thursdays) with a warm up and a cool down: a Do Now of free-recall retrieval practice with classroom.remembermore.app (5-6 mins), plus mini checks and exit checks. Every Thursday, I assessed and recorded each learner's performance grade on a pop quiz.

On testing – testing in schools is usually employed diagnostically, undertaken for purposes of assessment, to assign learners grades, or rank, or both. Yet tests offer so much more. Roediger et al. (2011) list ten benefits. I was looking to leverage benefit 7; however, benefits 1, 2, 4, 9 and 10 all contribute.

Forecasting grades

This academic year, I found myself exploring the metacognitive opportunities associated with RememberMore. Specifically, expanding my knowledge and application of one’s own judgement of learning, or forecasting performance.

The act of assessing one’s own judgement of learning is one of the most effective ways to deepen a memory trace.

Sadler (2006)

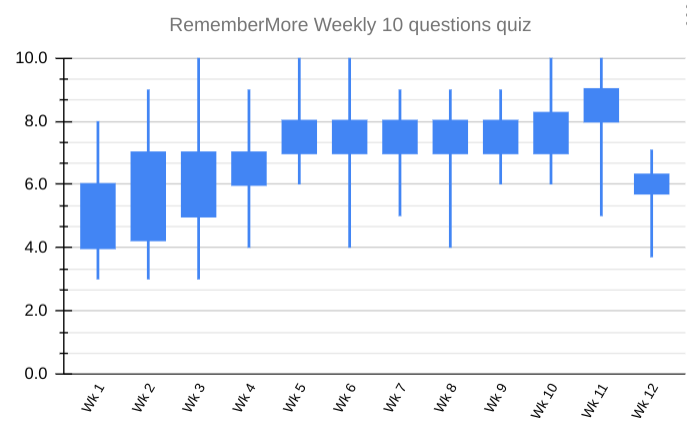

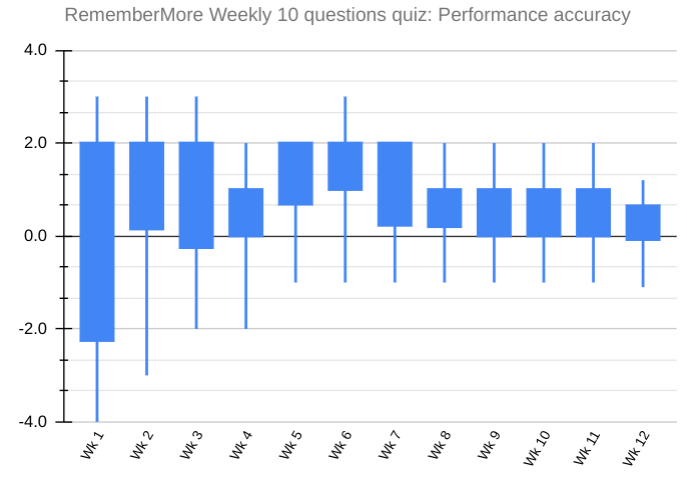

Together with the benefits of testing, the protocol of Successive Relearning (retrieval practice) and establishing routines, I adopted a learn-forecast-accuracy model with self-assessed marking and low-stakes quizzing (a 10-question quiz from a deck of 30 cards, or 10/30). The cohort was two Year 8 mixed prior attainment English classes (n=52).

Setting an assessment will often lead to an improvement in performance, even if that assessment does not take place, because students benefit from assessment preparation. However, I added the requirement to forecast one’s own score before taking the quiz, and shared the expectation that learners be accurate in their assessment.

Protocol

- Step one – Students forecast performance out of 10 (10 questions from 30-40 categorised, tagged retrieval prompts – e.g. Ch02 vocab)

- Step two – Set and display retrieval practice from classroom.remembermore.app – show questions

- Step three – Students adjust or confirm forecast result

- Step four – Take quiz – 2 minutes is usually sufficient

- Step five – Present correct responses. Self-assess – check (I try to avoid adjudicating – students mark). 1-2 minutes

- Step six – Students report their performance and accuracy
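The bookkeeping behind this protocol is small: for each learner and each quiz, a confirmed forecast and a self-marked score, both out of 10. What follows is a minimal sketch of how that could be recorded, not how RememberMore stores it. The `QuizRecord` name, its fields, and the "within one mark counts as accurate" tolerance are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class QuizRecord:
    """One learner's result for a single 10-question quiz (hypothetical structure)."""

    student: str
    forecast: int  # the learner's confirmed forecast, out of 10
    score: int     # the learner's self-marked score, out of 10

    @property
    def error(self) -> int:
        """Signed forecast error: positive means over-confident, negative under-confident."""
        return self.forecast - self.score

    def is_accurate(self, tolerance: int = 1) -> bool:
        """Assumption: a forecast within `tolerance` marks of the score counts as accurate."""
        return abs(self.error) <= tolerance


# Example with made-up numbers: a learner forecasts 8 and scores 6.
record = QuizRecord(student="Learner A", forecast=8, score=6)
print(record.error)          # signed error in marks
print(record.is_accurate())  # whether the forecast was within tolerance
```

Keeping the error signed, rather than only its size, is a deliberate choice in this sketch: it separates over-confidence from under-confidence, which call for different conversations.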

The conversations that followed were straightforward and anchored students to their next action.

| Forecast | Accuracy | Conversation |
| --- | --- | --- |
| Low | Low | Very rare – Why such a low forecast? If a positive inaccuracy – no response. (Why did they not know – still a concern.) |
| Low | High | If you knew you were going to underperform, why did you not take action? Very powerful. |
| High | Low | What did you learn from taking the quiz? Very powerful. |
| High | High | Accuracy recognised. How did you know you were going to perform well? Impact on others was palpable. |
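The four conversations above can be read as a simple lookup on two judgements: was the forecast low or high, and was it accurate? The sketch below is only an illustration of that logic. The cut-offs are assumptions, not part of the classroom protocol: a forecast of 7 or more out of 10 is treated as "High", and a forecast within one mark of the score is treated as "High" accuracy. The function name `conversation_for` is hypothetical, and the prompts are shortened versions of those in the table.

```python
def conversation_for(forecast: int, score: int,
                     high_forecast: int = 7, tolerance: int = 1) -> tuple[str, str, str]:
    """Return (forecast level, accuracy level, prompt) for one quiz result.

    Both thresholds are illustrative assumptions; adjust them to suit the class.
    """
    forecast_level = "High" if forecast >= high_forecast else "Low"
    accuracy_level = "High" if abs(forecast - score) <= tolerance else "Low"

    # Prompts paraphrased from the conversation table.
    prompts = {
        ("Low", "Low"): "Why such a low forecast?",
        ("Low", "High"): "You knew you would underperform. Why did you not take action?",
        ("High", "Low"): "What did you learn from taking the quiz?",
        ("High", "High"): "How did you know you were going to perform well?",
    }
    return forecast_level, accuracy_level, prompts[(forecast_level, accuracy_level)]


# Example with made-up numbers: a confident forecast of 9, a score of 4.
print(conversation_for(forecast=9, score=4))
```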

What I did not plan, but soon recognised, was that we had created a virtuous circle. To forecast accurately, learners needed to know their performance. To know their performance, learners needed to practise. The more learners practised, the more accurate their forecasts AND the better the scores they achieved.

To be accurate, you need to know your performance. To know your performance, you need to practise.

I still remember, and cherish, the first time Z boldly forecast 10. He is not an arrogant or over-confident boy. But he knew what he knew. He practised hard. The metrics showed me that; he already knew he was invested. He nailed three ten out of tens on the bounce.

Here are 11 weeks of quiz scores and performance accuracy for two Year 8 classes, and the termly 100-question Big Quiz (score divided by 10).

You do not have to be a data scientist to recognise the improving scores, the improving performance forecasts and the slight over-confidence. (RememberMore adjusts for that over-confidence.) Similarly, learners tend to be more confident after repeated or massed study, a well-reported cognitive bias. Repeated study most likely engenders greater processing fluency, which leads to an overestimation of performance.

On the back of this trial data and the conversations I had with students each Thursday, I will be looking to replicate the study. Should anyone else like to replicate the study, then let me know and we can make RememberMore available.

Leahy, S., Lyon, C., Thompson, M., & Wiliam, D. (2005). Classroom assessment: minute-by-minute and day-by-day. Educational Leadership, 63(3), 18-24.

Roediger, H., Putnam, A., Smith, M., et al. (2011). Ten benefits of testing and their applications to educational practice. In: Mestre, J. (ed.)
