Each exam board provides a variety of exam analysis tools that help teachers identify the questions / topics / skills in which students excelled or fell short. These tools also provide valuable comparative information on the performance of your students / classes / cohorts on individual questions when compared to AQA/Edexcel/OCR/WJEC national averages. Unfortunately, much like an autopsy, this information is of little benefit to the deceased, or to students past, though it is informative for department development. Could a similar approach be used to aid current learning in class? Of course. It is very much the Data Driven, PiXL approach. How about we offer our students an academic physical?

The timely collision of QuickKey (now Validated Learning) and @DataEducator (Exam Feedback Tool) with my personal interest in assessment and Data Driven leadership, and the heightened role of quality marking and constructive feedback in outstanding teaching, makes me think we can.

…consistently high quality marking and constructive feedback from teachers ensure that pupils make significant and sustained gains in their learning. (Ofsted)

Question Level Analysis (QLAs)

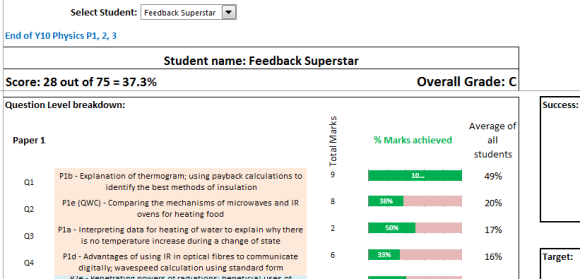

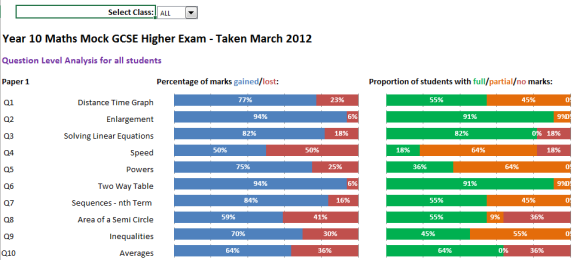

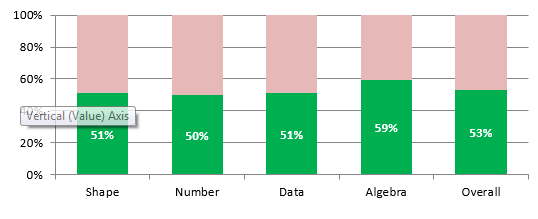

Question level analysis explores and presents students' responses to individual questions, typically against the mean, with strengths and areas for improvement identified. In the case of the exam board tools, the mean is taken over all students who sat the exam; in day-to-day teaching, it is the year group for core subjects, or a class, cohort or group of students. Of course, within a school it offers interesting comparative opportunities, with teacher input vs assessment outcome as the extremely simplified variables, as well as class comparisons when taught by the same teacher. This is tremendously powerful analysis that can easily have an effect on classroom practice and student learning. Correspondingly, from a teacher's perspective, it shows which questions were consistently learnt and which questions were best learnt (possibly consistently). Compelling evidence for QLA practice in teaching.
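To make the idea concrete, here is a minimal sketch of a question level analysis in Python. It is not how the exam board tools or the Exam Feedback Tool work internally; the function name, the data layout and the 5% tolerance are all my own assumptions for illustration.

```python
from statistics import mean


def question_level_analysis(class_marks, max_marks, comparison_means, tolerance=0.05):
    """Compare a class's mean mark on each question with a comparison mean.

    class_marks:      dict of student name -> list of marks, one per question
    max_marks:        list of maximum marks available, one per question
    comparison_means: list of mean marks for the comparison group
                      (national, year group, another class), one per question
    tolerance:        hypothetical threshold, as a fraction of the maximum mark,
                      inside which the class counts as "in line" with the mean
    """
    rows = []
    for q, (maximum, comparison) in enumerate(zip(max_marks, comparison_means)):
        class_mean = mean(marks[q] for marks in class_marks.values())
        # Express the gap as a fraction of the marks available so that
        # 1-mark and 6-mark questions can be compared on the same scale.
        gap = (class_mean - comparison) / maximum
        if gap > tolerance:
            label = "strength"
        elif gap < -tolerance:
            label = "area for improvement"
        else:
            label = "in line"
        rows.append({"question": q + 1, "class_mean": class_mean,
                     "comparison_mean": comparison, "gap": gap, "label": label})
    return rows
```

Feeding in a mark list per student and a list of comparison means returns one row per question, labelled as a strength, an area for improvement, or in line with the comparison group. Swapping the comparison means (national, year group, parallel class) gives the different comparisons described above.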

Multiple Choice Questions (MCQs)

I have been reading and learning as much as I can about objective questioning, in particular MCQs. There is a wealth of scholarly material that more often than not supports the humble MCQ and, consequently, they are one of the most frequently used assessment modes, particularly when there is a large body of material to be tested and / or a large number of students to be assessed. The other advantages are that MCQs have manageable logistics (discrimination and facility), are easy to administer and can be marked rapidly. There is also a good deal of advice on how best to construct your stems, options, distractors and correct or key responses… (and the impact of multiple correct answers); another time perhaps.
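For anyone wondering what facility and discrimination look like in practice, here is a rough sketch. Facility is the proportion of students who answered a question correctly; the discrimination index used here is the upper-lower version (facility among the top scorers minus facility among the bottom scorers). The function names, the data layout and the 27% group size are my assumptions, not something taken from any of the tools mentioned in this post.

```python
def facility(item_scores):
    """Proportion of students who got the item right (1 = correct, 0 = incorrect)."""
    return sum(item_scores) / len(item_scores)


def discrimination(responses, item, group_fraction=0.27):
    """Upper-lower discrimination index for one item.

    responses:      list of per-student lists of 0/1 scores, one entry per question
    item:           zero-based index of the question to analyse
    group_fraction: share of students placed in the top and bottom groups
                    (27% is a common convention; treat it as an assumption)
    """
    # Rank students by total score, highest first.
    ranked = sorted(responses, key=sum, reverse=True)
    n = max(1, round(len(ranked) * group_fraction))
    upper = [student[item] for student in ranked[:n]]
    lower = [student[item] for student in ranked[-n:]]
    # Positive values mean stronger students did better on this item.
    return facility(upper) - facility(lower)
```

A question everyone gets right has a facility of 1.0 and a discrimination of 0: it is easy, but it tells you nothing about who has learnt what. A question answered correctly mostly by the higher scoring students has a discrimination close to 1.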

The Knowledge Physical

Working with our Curriculum Leader for Science, we started out by creating focused assessments and transferring the scores to @DataEducator’s Exam Feedback Tool. It would have been even more powerful if the feedback hadn’t identified that almost all students need to broadly improve all/most science skills.

It currently indicates that most students need to work on most of the course covered in Year 9 & 10.

As student performance fractures, we expect the Exam Feedback Tool will pinpoint specific areas of need for specific students. The A4 printouts helped students realise just how much they need to cover in order to move up through the grades. (Grade boundaries are fed into the system.)

We had one or two feedback points: extending the topic description boxes, and the ability to obtain sub-totals for the sections or categories of questions within the assessment, though Peter has covered this in a more recent Maths version.

What was most notable was that, when we applied this diagnostic feedback with sixth formers, they requested very specific homework: questions that addressed their lowest scoring topics.

I asked Paul (Curriculum Leader for Science) about the effort that went into creating the mini assessments, which looked very professional with their mix of question types and scientific diagrams. That is when I learnt about ExamPro: an assessment tool, or question database, with related mark schemes and examiner comments, all mapped to the current specifications (a range of subjects and, more recently, KS3 questions).

Now there is one last piece of the assessment puzzle that has thrust itself front and centre over the past week or two, and the reason for the extended interest: QuickKey. Hard copy assessments, even those written in ExamPro, require extensive marking. MCQs (of which there are many in ExamPro) do not, if combined with QuickKey. 30 questions can be comfortably marked in 5 seconds and, from what I learnt over the weekend, efficiency and accuracy will be improved in a pending update next week.

Road Testing QuickKey

On Thursday morning my colleague and I road tested QuickKey with Y8, on a 10 question grammar test. The results and instant feedback were quite startling.

We gave the students their QuickKey IDs, their answer tickets and a copy of the graduated quiz (questions that get progressively more difficult). As students completed the test, I walked the room, scanning the tickets in an instant, feeding back “ghost style.”

You got 7 correct, find the three you need to improve.

The response from the students was quite startling. They were keen to get their scores, shocked at how quickly I could give them their score and eager to see the app at work again. I declined:

Not until you have found the 3 incorrect responses and corrected them.

Unfortunately, the assessment environment quickly deteriorated, given the response of the students: their intrigue, disbelief and enthusiasm for instant grading. Students took advantage of the situation and, as part of their solution, started comparing their answer tickets to find the incorrect responses. Not an ideal test environment, though we thought that the students' conversation had value and turned a blind eye / deaf ear. Instant feedback on 10 questions in 3 seconds caused a real stir; what about when there are 30 questions? A rescan or override of a previous score would be a neat new feature – apparently on the planned update list too.

As for the graduated questions – #fail. Question 6 was the most difficult? Questions 1, 2, 3, 4 and 7 all scored 100%. Seems I will need to spend more time writing MCQs.

Next steps…

In KS5 we will continue to work with the Exam Feedback Tool and ExamPro in Science – maybe encourage Maths and Economics as early adopters.

In KS4, the Exam Feedback Tool and ExamPro MCQs in Science (and hopefully we will pick up a few other interested Curriculum Leaders). Review a new Science platform, Educake: QLA online.

In KS3, the Exam Feedback Tool and ExamPro MCQs in Science (and hopefully we will pick up a few other interested Curriculum Leaders). We hope to find a way to use QuickKey in English on either the weekly spelling quiz or the spelling and grammar quiz.

Chat with Educake, who seem to have the idea (and a little more) mapped out online. We would need to find a way to offer an offline grading solution for our context.

If you are a geographer and keen to try QuickKey – start here.