Some might think that I have given far too much consideration to the “whys and wherefores” of graded lesson observations these past fourteen or so months. If not graded lesson observations, then Y11 pupil academic FFT(D) targets set as performance objectives certainly come a close second. And with those two opening statements, I can see my two trusted senior-leader, car-pool edu-junkies grinning a painful confirmation. Target grades as performance objectives will have to wait; right now, let’s get back to graded lesson observations.

Put aside what you know about graded lesson observations for a moment: all that you have read on the CEM Blog, all that you read and learnt from the hefty Measures of Effective Teaching Project. Acknowledge that graded lesson observations have been one of the key processes and responsibilities for any school leader charged with leading on the ‘Quality of Teaching’ in schools. I am sure you are expecting a conversation on the “grading” of lesson observations, and you won’t be disappointed, but first I am keen to uncover whether or not the school leader with a responsibility for the Quality of Teaching should also lead on Performance Review/Appraisal/Management* (delete as appropriate). I do not have the answer, but you can add your view here. Should the responsibility for Quality of Teaching and Performance Review fall under one line? (How is your leadership team structured?)

My unscientific investigations would suggest that in most schools they do, and in my limited experience I am finding that where the responsibility for Quality of Teaching and Performance Review falls under different leadership roles, organisational blurring results. There seems to be a kind of organisational strain caused by these two goliaths as they joust for the attention and elbow grease of those charged with the operational duties.

As I continued to wrestle with the balance of roles and responsibilities for these two important school processes, the burgeoning discussion between the “notoriety” (those educationalists with influence and right-leaning think tank Policy Exchange) and Ofsted regarding graded lesson observations roared on. Amplified by the accelerating adoption of social discussion within the profession and an inexorable thirst for Research (that’s research with a big R), the notoriety were invited to Ofsted HQ. The wheels were in motion: graded lesson observations were, ironically, inadequate. And as Tom Sherrington pointed out, even if there was “so much inertia in the system…. make no mistake, the game has changed.”

And for good reason. Pick up what you put aside. Graded lesson observations were woefully inadequate methods to evaluate teacher effectiveness (perhaps more woeful than Y11 pupil academic FFT(D) targets set as performance objectives, sorry). Professor Coe’s words of caution sing to the choir of the profession: if a lesson is judged outstanding, the probability that a second observer would give a different judgement is up to 78%; if a lesson is judged inadequate, the probability that a second observer would give a different judgement rises to 90%. As shocking as this information is, if nothing else, use it to your advantage. If, on inspection, a lesson is graded Outstanding, do not steer our good-natured HMIs back to confirm that teacher’s awe-inspiring prowess. Whereas, if a lesson was graded 4, get a second HMI in there fast, if only to question the first HMI’s commitment to their judgement. Meanwhile, Professor Dylan Wiliam threw a stiff jab with his calculations, noting that we would need at least five independent observers, observing a teacher with at least six different classes, to achieve an observation judgement reliability of 0.9. Leaving the teaching unions with a precarious dilemma to solve.

In the midst of a growing swell of discontent, we were confident that graded lesson observations would no longer carry a crucifying yet unreliable grade. However, we were less sure whether or not lesson observations themselves would survive the onslaught (“onslaught” too much of a giveaway?). Hence, like a bereft Loyd Grossman, we deliberated and cogitated: in a world without lesson observation grades, how might we judge the Quality of Teaching, and to whom would the responsibility for the incoming Performance Related Pay fall? Without warning, like the dealer at the roulette table, our Principal announced “rien ne va plus,” or “no more bets please.”

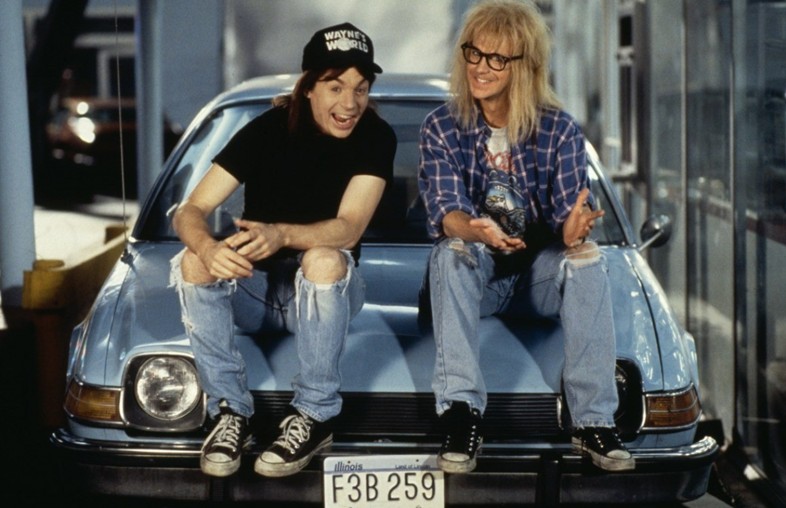

With summer results in and analysed, Ofsted announced that they would drop the grading of individual lessons and no longer aggregate scores on lessons in their feedback to headteachers. We were stunned. Left shocked and aghast. The move, they told us, followed a trial involving some 250 schools in the Midlands area which, according to Sir Michael Wilshaw, “proved incredibly popular”. Unsurprising: few in the profession wanted to be crucified unreliably. Instead, Ofsted said they would say where teaching was good and where it needed improvement. “Ex-cel-lent. Party-on.” Though following that formal and encouraging announcement, we, like most schools, still had one more unreliable lesson observation cycle scheduled. What is more, a lesson observation grade profile (e.g. 2/3 Outstanding lesson observations, 2/3 Good lesson observations) was defined in our very first Performance Related Pay decision-making process, which we duly (if hesitantly) completed.

The 2013-14 Performance Review cycle was about to close and the new 2014-15 Performance Review cycle was about to open. What was to become of the humble graded, internally isolated lesson observation?
